My wife and I were in a hospital room a few weeks ago, waiting for her to go into the operating room for a C-section to deliver our twins. She had to answer a bunch of questions, fill out a seemingly endless pile of paperwork, and listen to various doctors and nurses describe what was going to happen that day, along with any risks involved.

The anesthesiologist went through a laundry list of potential side effects from the anesthesia he would be administering for the surgery. He talked about headaches, numbness, nausea, vomiting, itchiness, and a whole host of other possible issues. He then walked us through the probabilities for each one: one occurred in 1 out of every 400 people, another in 1 out of every 5,000, and so on.

It would have been easy to freak out about these statistics, and I’m sure many people do. But before he left the room, this doctor offered a great closing line that put the situation in perspective. He said, “But don’t worry too much about all of these risks. The riskiest thing you did today was the drive over here. You’re far more likely to get injured in a car accident than see something go wrong in the operating room.”

I love the way he framed this because perception and reality are often at odds when it comes to understanding risk.

Research has shown that the way doctors frame outcomes can change patients’ minds about whether to go through with a surgical procedure. Patients decide very differently when a doctor says “you have a 90% chance of survival” than when the doctor says “you have a 10% chance of mortality,” even though the two statements are equivalent. People also judge a risk to be higher when they’re told it happens to “1 out of 100” people than when they’re told it has a “1 percent chance of happening.”

It’s not only framing that matters but also how we perceive risk in the first place.

For example, after 9/11, people were terrified to fly, so more of them drove to their destinations instead. One study calculated that nearly 1,600 more people died in car accidents in the year following 9/11 because of this increase in road travel.

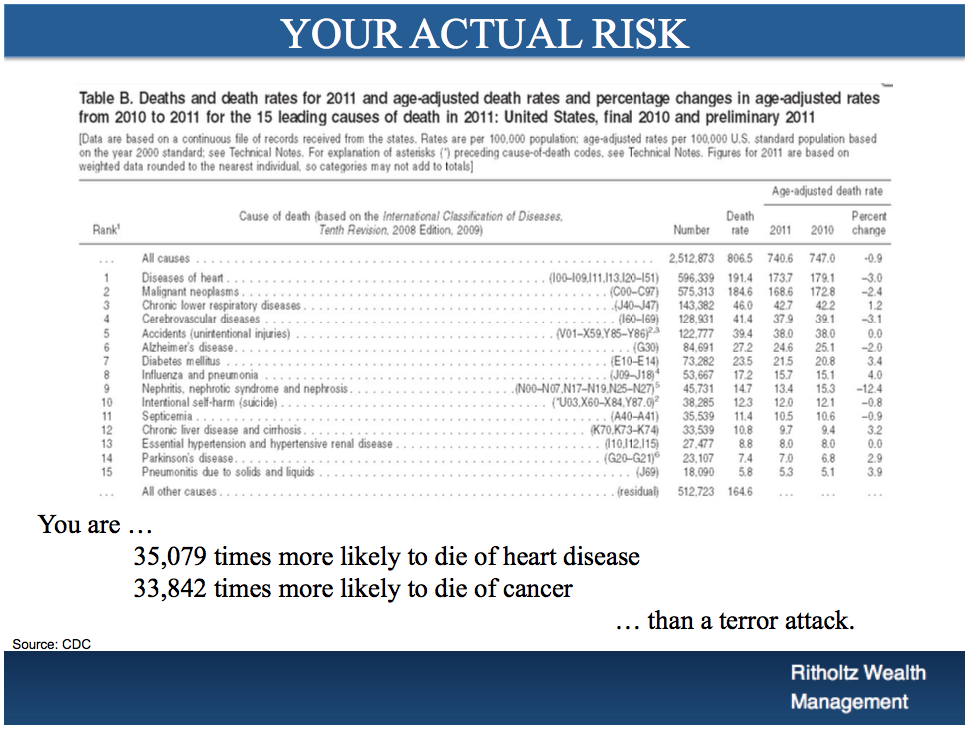

My colleague Barry Ritholtz has a great presentation he does at conferences where he tries to put risk into perspective. Here’s one of his slides on the leading causes of death:

Cars kill more people than handguns. Obesity kills roughly 100,000 people a year. Yet people are far more worried about relatively minor risks because those risks feel more tangible in the moment.

We’re scared of terrorist attacks because we see them on the news every time one occurs. It’s not hard to find people who worry about being on a plane or in a big city because of the potential for a terrorist attack, but you rarely, if ever, hear people actively worrying about heart disease or a car accident.

So why do we have such a hard time distinguishing perceived risks from actual ones?

Part of it is based on culture. Then there’s the fact that fear sells. The media wouldn’t play up bad news so much if people weren’t attracted to these types of stories. But a lot of it has to do with how our brains are hardwired.

In his book, The Science of Fear: How the Culture of Fear Manipulates Your Brain, Daniel Gardner describes some of the pitfalls we run into when trying to assess risk properly:

> Once a belief is in place, we screen what we see and hear in a biased way that ensures our beliefs are “proven” correct. Psychologists have also discovered that people are vulnerable to something called group polarization — which means that when people who share beliefs get together in groups, they become more convinced that their beliefs are right and they become more extreme in their views. Put confirmation bias, group polarization, and culture together, and we start to understand why people can come to completely different views about which risks are frightening and which aren’t worth a second thought.

It’s also much easier to be afraid of things we can easily recall. Gardner uses Daniel Kahneman’s two systems of thought to explain:

> You may have just watched the evening news and seen a shocking report about someone like you being attacked in a quiet neighborhood at midday in Dallas. That crime may have been in another city in another state. It may have been a very unusual, even bizarre crime — the very qualities that got it on the evening news across the country. And it may be that if you think about this a little — if you get System Two involved — you would agree that this example really doesn’t tell you much about your chance of being attacked, which, according to the statistics, is incredibly tiny. But none of that matters. All that System One knows is that the example was recalled easily. Based on that alone, it concludes that risk is high and it triggers the alarm — and you feel afraid when you really shouldn’t.

There are plenty of parallels to investing here. Investors are constantly fighting the last war by trying to hedge out risks after they’ve already occurred. Short-term fears often disrupt even the most well-intentioned long-term plans. Most ordinary investors don’t pay attention to the financial markets until something bad happens. Then they’re all ears and looking to do something, anything, to allay their fears.

Anyone who has followed the markets since the Great Recession should be well aware that fear and pessimism sell far better than calm and optimism. These days the negative view always sounds more intelligent than the positive one.

Risks obviously do exist, both in the markets and in other aspects of life. But it makes sense to slow down a little before overreacting to the latest news headlines. There’s never been a better time to be alive, but that’s hard to appreciate when we act first and think later.

Source:

The Science of Fear

Further Reading:

Disconfirmations, Framing & Satisficing

Now here’s what I’ve been reading lately:

- Protecting yourself from sequence of return risk (Of Dollars and Data)

- Prepping for double intense carnage (A Teachable Moment)

- Hot stocks can make you rich but they probably won’t (NY Times)

- Josh Brown on what evidence-based investing means to him (ETF.com)

- What’s your value proposition? (Big Picture)

- How to save money for your children (Humble Dollar)

- 7 reasons you shouldn’t start your own business (Monevator)

- The cat backtest (Bloomberg)

- 5 choices you will regret forever (Travis Bradberry)